clmtrackr.js

Javascript library for fitting facial models to faces in images and video

Library Reference

clmtrackr is a javascript library for fitting facial models to faces in images and video, and can be used for getting precise positions of facial features in an image, or precisely tracking faces in video.

Watch this example of clmtrackr tracking a face in the talking face video:

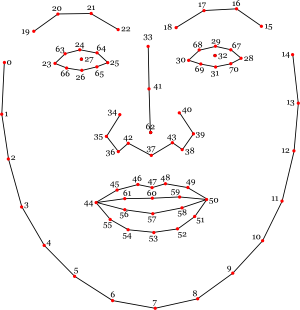

The facial models included in the library follow this annotation:

Once started, clmtrackr will try to detect a face on the given element. If a face is found, clmtrackr will start to fit the facial model, and the positions can be returned via getCurrentPosition().

The fitting algorithm is based on a paper by Jason Saragih & Simon Lucey. The models are trained on annotated data from the MUCT database plus some self-annotated images.

Basic usage

Initialization:

var ctracker = new clm.tracker();

ctracker.init();Starting tracking:

ctracker.start(videoElement);Getting the points of the currently fitted model:

var positions = ctracker.getCurrentPosition();Drawing the currently fitted model on a given canvas:

var drawCanvas = document.getElementsById('somecanvas');

ctracker.draw(drawCanvas);Functions

These are the functions that the clm.tracker object exposes:

- init( model ) : initialize clmtrackr.

- model : (optional) a model to use for tracking. If no model is specified, the built-in model from 'model_pca_20_svm.js' will be used.

- start( element, box ) : start the fitting/tracker. Returns

falseif the tracker hasn't been initalized with a model. - element : a canvas or video element

- box : (optional) the bounding box of where the face is, as an array

[x, y, width, height]wherexandyrefer to the coordinates of the top left corner of the bounding box. If no bounding box is given, clmtrackr tries to detect the position of the face itself. - stop( ) : stop the running tracker.

- track( element, box ) : do a single iteration of model fitting. Returns the current positions of the fitted model as an array of positions

[[x0, y0], ... , [xn, yn]]if tracking iteration succeeds. Returnsfalsewhen the model is currently not tracking a face, e.g. during inital face detection or if tracking has been lost. - element : a canvas or video element

- box : (optional) the bounding box of where the face is, as an array

[x, y, width, height]wherexandyrefer to to the coordinates of the top left corner of the bounding box. If no bounding box is given, clmtrackr uses the last known position, or tries to detect the position of the face. - reset( ) : reset the tracking. This will re-initialize detection and initial fitting.

- draw( canvas, pv, path ) : draw the currently fitted facial model

- canvas : the canvas element to draw the model on

- pv : (optional) the model parameters as an array. (default is to use the current parameter values)

- path : (optional) type of path to draw, either "normal" or "vertices" (default : normal)

- getScore( ) : Get the current score of the model fitting. The score is based on a SVM classifier which detects how strongly the image precisely under the fitted model resembles a face. Returned values range from 0 (no fit) to 1 (perfect fit). The default threshold for assuming we've lost track of the face is anything below 0.50.

- getCurrentPosition( ) : Get the current positions of the fitted facial model. Returns the positions as an array

[[x0, y0], ... , [xn, yn]]. - getCurrentParameters( ) : Get the current parameters for the fitted facial model. Returns the model parameters as an array

[p0, p1, ... , pn] - getConvergence( ) : Get the mean model movements (summed over all points) over the last 10 iterations. A number below 0.5 signifies the model probably has converged.

- setResponseMode( type, list ) : Set how the responses are calculated (see below).

- type : the method of response calculations, either "single", "cycle" or "blend"

- list : an array of response filter strings, either "raw", "sobel" or "lbp", for instance ["raw", "lbp"]. When type is "single" clmtrackr will only use the first element in the array. When type is "cycle", clmtrackr will cycle through the array, using one of the types for each iteration. When type is "blend", clmtrackr will blend all the different types of responses in the array.

Responses

When trying to fit the model, we calculate the likelihood of where the true points are in a region around each point. These likelihoods are called the responses. Clmtrackr includes three different types of responses: "raw", which is based on SVM regression of the grayscale patches, "sobel", which is based on SVM regression of the sobel gradients of the patches, which means it's more sensitive to edges, and "lbp", which is based on SVM regression of local binary patterns calculated from the patches. The type "raw" is the fastest way to calculate responses, since it doesn't do any preprocessing of the patches, but may be slightly less precise than "lbp" or "sobel". By default, clmtrackr only uses the "raw" type of response, but it is possible to change to the other types of responses to increase precision, by the function setResponseMode above.

Additionally, there are also methods to try to combine the different types of responses. By default, clmtrackr only uses one type of response, but you can try to improve tracking by either blending or cycling different types of responses. When blending different types of responses, clmtrackr will calculate all the given types of responses in the array list, and average these responses. Since we're then calculating multiple responses per iteration, this will lead to slower tracking. If you're cycling different types of responses, clmtrackr will cycle through the list of responses in the array "list", but only calculate one type for each iteration. This means tracking will not be much slower than using single responses, but you may experience that the fitted model "jitters" due to disagreement between the different types of responses.

Try out the different response modes in this example

Parameters

When initializing the object clm.tracker, you can optionally specify some object parameters, for instance:

var ctracker = new clm.tracker({searchWindow : 15, stopOnConvergence : true});The optional object parameters that can be passed along to clm.tracker() are :

- constantVelocity {boolean} : whether to use constant velocity model when fitting (default is true)

- searchWindow {number} : the size of the searchwindow around each point (default is 11)

- useWebGL {boolean} : whether to use webGL if it is available (default is true)

- scoreThreshold {number} : threshold for when to assume we've lost tracking (default is 0.50)

- stopOnConvergence {boolean} : whether to stop tracking when the fitting has converged (default is false)

- faceDetection {object} : object with parameters for facedetection :

- useWebWorkers {boolean} : whether to use web workers for face detection (default is true)

Models

There are several pre-built models included. The models will be loaded with the variable name pModel, so initialization of the tracker with any of the models can be called this way:

ctracker.init(pModel);All of the models are trained on the same dataset, and follow the same annotation as above. The difference between them is in type of classifier, number of components in the facial model, and how the components were extracted (Sparse PCA or PCA). If no model is specified on initialization, clmtrackr will use the built-in model from model_pca_20_svm.js as a default choice.

A model with fewer components will be slightly faster, with some loss of precision. The MOSSE filter classifiers will run faster than SVM kernels on computers without support for webGL, but has slightly poorer fitting.

- model_pca_20_svm.js : SVM kernel for classifiers, 20 components PCA (the default model included in clmtrackr.js)

- model_pca_10_svm.js : SVM kernel for classifiers, 10 components PCA

- model_spca_20_svm.js : SVM kernel for classifiers, 20 components Sparse PCA

- model_spca_10_svm.js : SVM kernel for classifiers, 10 components Sparse PCA

- model_pca_20_mosse.js : MOSSE filter for classifiers, 20 components PCA

- model_pca_10_mosse.js : MOSSE filter for classifiers, 10 components PCA

Files

- js/clm.js : main library

- js/svmfilter/svmfilter_webgl.js : classifier library for SVM, webGL version

- js/svmfilter/svmfilter_fft.js : classifier library for SVM, non-webGL version

- js/mossefilter/mosseFilterResponses.js : classifier library for MOSSE correlation filters

- js/facedetector/faceDetection.js : facedetection library for initial detection, wrapping jsfeat and mosse.

- js/facedetector/faceDetection_worker.js : web worker wrapper for facedetection.

- build/clmtrackr.js : packaged version of the above files plus dependencies

- build/clmtrackr.min.js : packaged and minified version

- build/clmtrackr.module.js : packaged version, as an ES6 module

Utility libraries

face_deformer.js is a small library for deforming a face from an image or video, and output it on a webgl canvas. This is used in some of the examples.

Example usage:

var fd = new faceDeformer();

// initialize the facedeformer with the webgl canvas to draw on

fd.init(webGLCanvas);

// load the image element where the face should be copied from

// along with the position of the face

fd.load(imageElement, points, model);

// draw the deformed face on the webgl canvas

fd.draw(points);These are the functions that the faceDeformer object exposes:

- init( canvas ) : initialize the face deformer with a webGL canvas.

- canvas : a webgl canvas element

- load( element, points, model ) : load the face to deform from an image, video or canvas element.

- element : a canvas, image or video element

- points : the position of the face on the element, according to the face model above, as an array of positions

[[x0, y0], ... , [xn, yn]]. - draw( points ) : draw the deformed face on the webgl canvas.

- points : the new points to deform the face to, as an array of positions

[[x0, y0], ... , [xn, yn]]. - drawGrid( points ) : draw the grid of the vertices which are used to deform the face on the webgl canvas.

- points : the new points to deform the face to, as an array of positions

[[x0, y0], ... , [xn, yn]]. - clear( ) : clear the webgl canvas.

License

clmtrackr is distributed under the MIT license